Use Pre-Trained TensorFlow Models with a GPU in SAP AI Core

- How to sync a pre-trained model to SAP AI Core

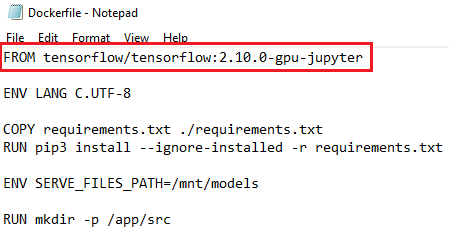

- How to write a pipeline and the necessary Docker code to enable GPU deployment.

- How to deploy an AI model and use it for online inferencing.

Prerequisites

- A BTP global account

If you are an SAP developer or SAP employee, refer to the following links (for internal SAP stakeholders only):

How to create a BTP Account (internal)

SAP AI Core

If you are an external developer, customer, or partner, refer to this tutorial: You have set up an Enterprise SAP BTP Account for Tutorials. Follow its instructions to get an account and to set up entitlements and service instances for SAP AI Core.

- You have set up your Git Repository with SAP AI Core.

- You have created a Docker registry secret in SAP AI Core.

Pre-read

All required files are available for download in the step that uses them, so you can complete the tutorial without preparing anything else in advance.

This tutorial demonstrates a use case in which you have trained an ML model on your local computer and want to deploy it to production with SAP AI Core.

The model in this example uses a pre-trained GloVe embedding layer followed by a custom stack of neural network layers for fine-tuning. The following tutorials were used as references.
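To make the idea of a pre-trained embedding layer concrete, here is a minimal sketch of how GloVe vectors are commonly turned into an embedding matrix before the custom layers are stacked on top. It is not the tutorial's own code: the function names, the tiny in-memory sample, the `word_index` mapping, and the convention of reserving row 0 for padding are all assumptions made for illustration. The only fact relied on is the GloVe text format, where each line holds a token followed by its space-separated vector components.

```python
import io


def load_glove_vectors(lines):
    """Parse GloVe text lines ("token v1 v2 ... vd") into a dict of float lists."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors


def build_embedding_matrix(word_index, vectors, dim):
    """Build a (vocab_size + 1) x dim matrix.

    Row i holds the vector of the word whose index is i. Row 0 is left as
    zeros (assumed here to be the padding index), and words missing from
    the pre-trained vectors also stay as zeros.
    """
    matrix = [[0.0] * dim for _ in range(len(word_index) + 1)]
    for word, idx in word_index.items():
        vec = vectors.get(word)
        if vec is not None and len(vec) == dim:
            matrix[idx] = vec
    return matrix


# Hypothetical stand-in for a real GloVe file, which would be opened
# from disk and passed to load_glove_vectors in the same way.
sample_file = io.StringIO("good 0.1 0.2 0.3\nbad -0.1 -0.2 -0.3\n")
glove = load_glove_vectors(sample_file)

# Hypothetical vocabulary produced by a tokenizer; "meh" has no GloVe vector.
word_index = {"good": 1, "bad": 2, "meh": 3}
embedding_matrix = build_embedding_matrix(word_index, glove, dim=3)
```

A matrix built this way is typically used to initialize the weights of a frozen embedding layer, so that only the layers stacked on top of it are trained.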

By the end of this tutorial, you will have a movie review classification model deployed in SAP AI Core that you can use to make predictions in real time.

You can complete each step of this tutorial using any of the following tools to control operations in SAP AI Core:

- Postman

- SAP AI Core SDK (demonstrated below)

- AI API client SDK

Please complete the prerequisites before you get started.